CT Logs, Subdomain Enumeration, and Every Vercel App

vercel.app domains. While other users' projects are not visible on Vercel, they are inherently visible on the public internet.

What if there was a way to easily browse other people's Vercel apps?

How can I find every "vercel.app" domain on the internet?

These questions led me down a rabbit hole of Certificate Transparency logs, TLS, and ultimately the creation of almostevery.vercel.app.

HTTPS and CT Logs

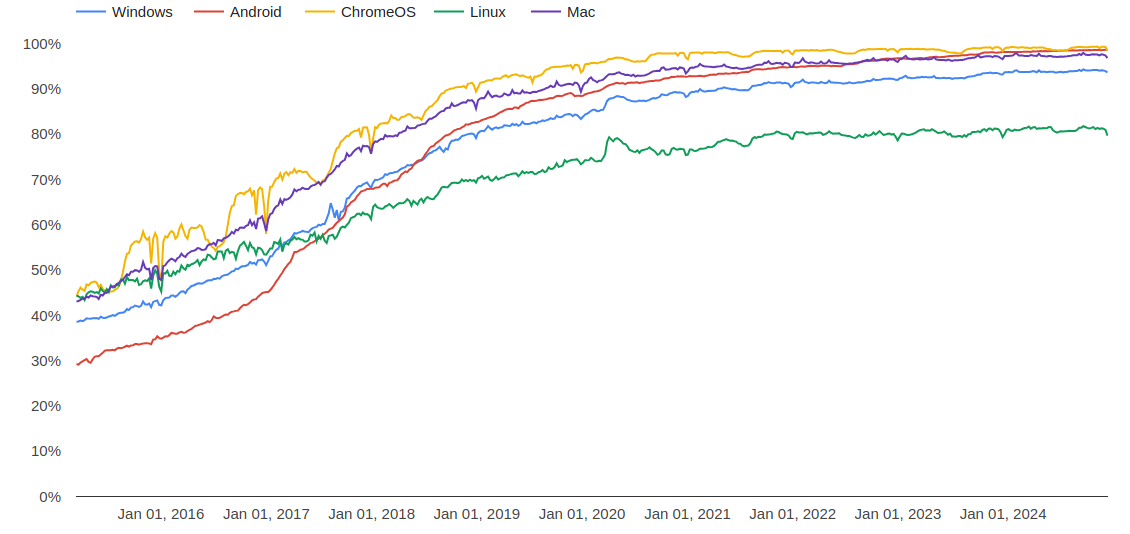

On today's internet, HTTPS encryption is so ubiquitous that you rarely, if ever, encounter a webpage hosted on HTTP. This wasn't always the case, however. As recently as 2015, more than 50% of Google Chrome traffic was served over unencrypted HTTP.

Widespread adoption of HTTPS was driven by several converging factors. Browsers began marking HTTP sites as insecure and Google started to favor HTTPS in search rankings. Meanwhile, cloud services and companies like Let's Encrypt began to streamline the TLS certificate setup and renewal process. These forces, coupled with growing privacy concerns after Snowden's disclosures, transformed HTTPS from a special feature for credit cards and authentication to the default standard for the internet.

One of the lesser-known catalysts of this transformation was Certificate Transparency (CT) logs. CT logs were established by Google in 2013 and formalized in RFC 6962. The premise is that every issued TLS certificate should be recorded in a public, append-only log to allow for better visibility and auditing. Before CT logs, certificate authorities were often the target of cybercriminals looking to issue fraudulent public key certificates in order to maliciously intercept traffic. With CT logs, a fraudulent certificate is publicly visible and can be quickly identified.

While this transparency has massively improved internet security, it has created an interesting side effect: every certificate becomes part of the public record. With the proliferation of automated hosting platforms like Vercel, this means application footprints are often more visible than people might realize.

How CT logs work

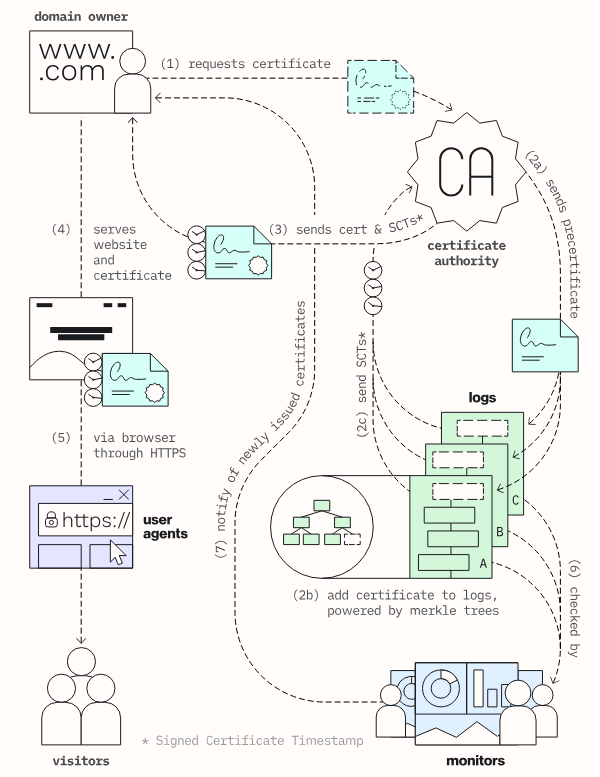

At a high level, there are three main actors in the Certificate Transparency space:

- Logs: Cryptographic record of certificates, maintained by providers like Google, Cloudflare, and Let's Encrypt

- Monitors: Services that ingest CT logs and provide access to CT log data, run by companies and organizations like crt.sh, Facebook, and Censys

- User Agents: Web browsers that enforce CT during TLS handshakes by checking that a certificate comes with proof of logging, including Chrome, Safari, and Brave

Today, Chrome and Safari require at least two Signed Certificate Timestamps (SCTs) from different CT logs for HTTPS connections. An SCT is a log's signed promise to include the certificate.

This entire process is usually abstracted away from application developers, especially on hosting platforms like Vercel.

For a more detailed breakdown of how CT logs work, I recommend reading:

- RFC 6962 — Formal specification from 2013

- certificate.transparency.dev — Official CT docs

- Trillian docs — Merkle tree implementation powering many CT logs

- Cloudflare CT Log Monitoring – Overview of how CT log monitoring works
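To make the "append-only and cryptographically verifiable" idea more concrete, here is a minimal sketch of the Merkle tree hash that RFC 6962 defines over a log's entries. The entries below are placeholder byte strings (an assumption for illustration); a real log hashes structured leaf records, and production implementations such as Trillian are far more involved.

```python
import hashlib


def leaf_hash(entry: bytes) -> bytes:
    # RFC 6962 prefixes leaves with 0x00 so a leaf can never be
    # mistaken for an interior node.
    return hashlib.sha256(b"\x00" + entry).digest()


def node_hash(left: bytes, right: bytes) -> bytes:
    # Interior nodes are prefixed with 0x01.
    return hashlib.sha256(b"\x01" + left + right).digest()


def merkle_root(entries: list[bytes]) -> bytes:
    """Merkle Tree Hash over an ordered list of entries (RFC 6962, section 2.1)."""
    n = len(entries)
    if n == 0:
        return hashlib.sha256(b"").digest()
    if n == 1:
        return leaf_hash(entries[0])
    # Split at the largest power of two strictly less than n.
    k = 1
    while k * 2 < n:
        k *= 2
    return node_hash(merkle_root(entries[:k]), merkle_root(entries[k:]))


if __name__ == "__main__":
    # Placeholder entries standing in for logged certificates.
    log = [b"cert-a", b"cert-b", b"cert-c"]
    print(merkle_root(log).hex())
    # Appending an entry produces a new root that commits to the old entries too.
    print(merkle_root(log + [b"cert-d"]).hex())
```

Because the root depends on every entry and on their order, a log cannot quietly drop or rewrite a certificate without the published root changing.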

Finding Every vercel.app

For my use case, I'm effectively doing a point-in-time audit of all the certificates for vercel.app domains, so I went to CT log monitors.

crt.sh

I decided to start with crt.sh, which is an open-source CT log monitor that provides a web UI and a public read-only DB. The website allows you to run searches and see the SQL queries that are being executed. For example, when I search for *.vercel.app:

    WITH ci AS (
        SELECT min(sub.CERTIFICATE_ID) ID,
               min(sub.ISSUER_CA_ID) ISSUER_CA_ID,
               array_agg(DISTINCT sub.NAME_VALUE) NAME_VALUES,
               x509_commonName(sub.CERTIFICATE) COMMON_NAME,
               x509_notBefore(sub.CERTIFICATE) NOT_BEFORE,
               x509_notAfter(sub.CERTIFICATE) NOT_AFTER,
               encode(x509_serialNumber(sub.CERTIFICATE), 'hex') SERIAL_NUMBER,
               count(sub.CERTIFICATE_ID)::bigint RESULT_COUNT
            FROM (SELECT cai.*
                      FROM certificate_and_identities cai
                      WHERE plainto_tsquery('certwatch', $1) @@ identities(cai.CERTIFICATE)
                          AND cai.NAME_VALUE ILIKE ('%' || $1 || '%')
                      LIMIT 10000
                 ) sub
            GROUP BY sub.CERTIFICATE
    )
    SELECT ci.ISSUER_CA_ID,
           ca.NAME ISSUER_NAME,
           ci.COMMON_NAME,
           array_to_string(ci.NAME_VALUES, chr(10)) NAME_VALUE,
           ci.ID ID,
           le.ENTRY_TIMESTAMP,
           ci.NOT_BEFORE,
           ci.NOT_AFTER,

One of the drawbacks of crt.sh is that the results are limited to 10,000 items. If you search for something with more than 10,000 results, you get the following error message:

    Sorry, your search results have been truncated.
    It is not currently possible to sort and paginate large result sets
    efficiently, so only a random subset is shown below.

For more advanced queries and larger-scale data collection, crt.sh provides some public read-only replicas of their DB. Anyone can connect using:

    psql -h crt.sh -p 5432 -U guest certwatch

Merklemap

I wanted to cross-reference these results with another CT log monitor. I decided to use Merklemap, which provides an API to search for subdomains using CT logs. I ran a simple script to paginate through all the results for *.vercel.app from the Merklemap API, and, interestingly, the results differed significantly from what crt.sh returned.

Source of Truth?

The significant difference in results between these two CT log monitors highlights an important limitation: monitors are not a perfect source of truth. While the logs themselves are append-only and cryptographically verifiable, the monitors that aggregate and index the data have their own limitations. I explored several other CT log monitors and found similar limitations for larger-scale audits.
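One way to quantify how far two monitors disagree is to normalize the names each one returns and compare the resulting sets. The sketch below is illustrative only and is not the script behind almostevery.vercel.app: it assumes each monitor's output has already been fetched, that names may arrive newline-joined (as in the NAME_VALUE column of the crt.sh query above), and the sample hostnames are made up.

```python
def normalize(name_value: str, suffix: str = ".vercel.app") -> set[str]:
    """Split a newline-joined name field and keep hostnames under `suffix`."""
    names = set()
    for raw in name_value.splitlines():
        name = raw.strip().lower().rstrip(".")
        if name.startswith("*."):
            # A wildcard entry names no single host; keep its base name.
            name = name[2:]
        if name.endswith(suffix):
            names.add(name)
    return names


def compare(monitor_a: set[str], monitor_b: set[str]) -> dict[str, int]:
    """Count how many names are unique to each monitor, shared, and in the union."""
    return {
        "only_a": len(monitor_a - monitor_b),
        "only_b": len(monitor_b - monitor_a),
        "both": len(monitor_a & monitor_b),
        "union": len(monitor_a | monitor_b),
    }


if __name__ == "__main__":
    # Hypothetical rows standing in for two monitors' results.
    from_a = normalize("alpha.vercel.app\n*.beta.vercel.app\nexample.com")
    from_b = normalize("Beta.vercel.app\ngamma.vercel.app")
    print(compare(from_a, from_b))
```

A large `only_a` or `only_b` count is a sign that neither monitor can be treated as complete on its own, and the union is the best available approximation.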

Facebook, for example, offers a CT log monitor tool, but this is also limited to 10,000 results. I found a support case from 2021 raising this issue with the internal team at Meta, and it was essentially dismissed. I tried using the Censys search feature for all certificates under *.vercel.app, and I got the following error message:

    The query could not be executed in a reasonable time and has been aborted.
    See documentation on using the Censys Search Language.

Other CT log monitoring tools from companies like Cloudflare, DigiCert, and Entrust are designed for their customers to monitor their own domains, rather than providing general access to certificate data at scale.

This makes sense, given that CT logs are massive, often with hundreds of millions of certificates. It's expensive and operationally complex to maintain these monitors at scale. Not to mention the technical challenges of ingesting CT logs from a variety of providers, handling network errors gracefully, and providing this data in a highly available production system.
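To give a feel for the ingestion problem, here is a rough sketch of how a monitor might plan its reads against a single log using the RFC 6962 HTTP API, where `get-sth` reports the current tree size and `get-entries` returns a 0-based, inclusive range of entries. The log URL, batch size, and retry settings are assumptions for illustration, and the sketch only plans requests rather than making them.

```python
from typing import Iterator
from urllib.parse import urlencode


def entry_ranges(tree_size: int, batch_size: int) -> Iterator[tuple[int, int]]:
    """Yield inclusive (start, end) index pairs covering entries 0..tree_size-1."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    start = 0
    while start < tree_size:
        end = min(start + batch_size, tree_size) - 1
        yield start, end
        start = end + 1


def get_entries_url(log_url: str, start: int, end: int) -> str:
    """Build an RFC 6962 get-entries URL for one batch."""
    query = urlencode({"start": start, "end": end})
    return f"{log_url.rstrip('/')}/ct/v1/get-entries?{query}"


def backoff_delays(retries: int, base: float = 1.0, cap: float = 60.0) -> list[float]:
    """Exponential backoff schedule (in seconds) for retrying a failed batch."""
    return [min(cap, base * 2 ** attempt) for attempt in range(retries)]


if __name__ == "__main__":
    # Hypothetical log URL; tree_size would come from the log's get-sth response.
    log_url = "https://ct.example.com/log"
    for start, end in entry_ranges(tree_size=10, batch_size=4):
        print(get_entries_url(log_url, start, end))
    print(backoff_delays(4))
```

A real fetcher also has to cope with logs returning fewer entries than requested, so it should resume from the last index actually received rather than trusting the planned ranges. Multiply this by every log and hundreds of millions of entries, and the operational cost becomes clear.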

Perhaps there's an opportunity for a more complete monitor designed for larger point-in-time audits instead of continuous monitoring.

Building almostevery.vercel.app

After trying out several different CT log monitors, I decided to cut my losses and go with the domains I collected from crt.sh and Merklemap. I took the set union of the domains from the two monitors and ended up with a text file of ~500,000 unique domains. I then built a basic Next.js app inspired by everyuuid.com, and pushed the page to GitHub here.

Why Almost Every?

With these results, I accepted that public CT log monitors alone are not sufficient to find every "vercel.app". For this reason, and because every.vercel.app is taken, I decided to name my project "almostevery". Note that while these domains are discoverable through public CT logs, I intentionally chose not to check which domains are currently active to respect Vercel's terms of service.

What Next?

Perhaps Vercel should run with this idea and build a feature for users to explore other Vercel apps. This could be a fun feature for the dev community to explore other projects out there that likely wouldn't be discovered otherwise.

Regardless, almostevery.vercel.app should serve as a reminder that every time we use HTTPS for our webpages, we are opting into a system of Certificate Transparency for all our domains and subdomains. While they may not always be easy to find, they are permanently documented and discoverable on the public internet. This transparency ultimately helps make the web more secure by ensuring certificates can be monitored and verified by everyone.